Efficient Rendering Study Notes

Forward Rendering

- Do everything we need to shade a pixelLauritzen10

- For each light

- Shadow attenuation (sampling shadow maps)

- Distance attenuation

- Evaluate lighting and accumulate

- Object rendering pass does everythingKimBarrero11

- Single pass over geometry generates “final” imagePesce14

- Lights are bound to draw calls (via uniforms)Pesce14

- Accurate culling of light influence on geometry requires CSG splitsPesce14

- Multiple lights require either loops / branches in the shaders or shader permutationsPesce14

Characteristics:

- Advantages:

- Transparency via alpha blendingOlssonBilleterAssarsson13Olsson15

- MSAA and related techniques through hardware features (much less memory storage is required)OlssonBilleterAssarsson13Olsson15

- Fastest in its baseline case (single light per pixel, “simple” shaders or even baked lighting)Pesce14

- Doesn’t have a “constant” up-front investment, you pay as you go (more lights, more textures…)

- Least memory necessary (least bandwidth, at least in theory). Makes MSAA possiblePesce14Olsson15

- Single frame bufferOlsson15

- Easy to integrate with shadowmaps (can render them one-at-atime, or almost)Pesce14

- No extra pass over geometryPesce14

- Any material, except ones that require screen-space passes like Jimenez’s SS-SSSPesce14

- Single passOlsson15

- Simple if only few lightsOlsson15

- e.g., the sun

- Varying shading models is easy

- FlexibleOlsson15

- Forward or Deferred

- Issues:

- Computing which lights affect each body consumes CPU time, and in the worst cast, it becomes an O(n × m) operationKoonce07, Ineffective light cullingLauritzen10, Light culling not efficientAndersson11Pesce14

- Object space at best

- Shaders often require more than one render pass to perform lighting, with complicated shaders requiring worst-case O(n) render passes for n lightsKoonce07Lauritzen10

- Adding new lighting models or light types requires changing all effect source filesKoonce07

- Lighting / texturing variations have to be dealt with dynamic branches which are often problematic for the shader compiler (must allocate registers for the worst case …), conditional moves(wasted work and registers) or shader permutations(combinatorial explosion)Pesce14

Shaders quickly encounter the instruction count limit of Shader Model 2.0Koonce07- Memory footprint of all inputsLauritzen10

- Everything must be resident at the same time

- Shading small triangles is inefficientLauritzen10

- Shader permutations not efficientAndersson11Pesce14Olsson15

- Expensive & more difficult decaling / destruction maskingAndersson11, Decals needs to be multiplass, lit twice.Pesce14

- Complex shaders might not run optimallyPesce14Olsson15

- Texturing and lighting (and shadowing) is done in the same pass, thus shaders can require a lot of registeres and yield limited occupancy

- Accessing many textures in sequence might create more trashing than accessing them in separate passes

- Many “modern” rendering effecs require a depth/normal pre-pass anyways (i.e. SSAO, screen-space shadwos, reflections, and so on)Pesce14

- All shading is done on geometry, which means we pay all the eventual inefficiencies (e.g. partial quads, overdraw) on all shadersPesce14Olsson15

- No shadow map reuseOlsson15

- Computing which lights affect each body consumes CPU time, and in the worst cast, it becomes an O(n × m) operationKoonce07, Ineffective light cullingLauritzen10, Light culling not efficientAndersson11Pesce14

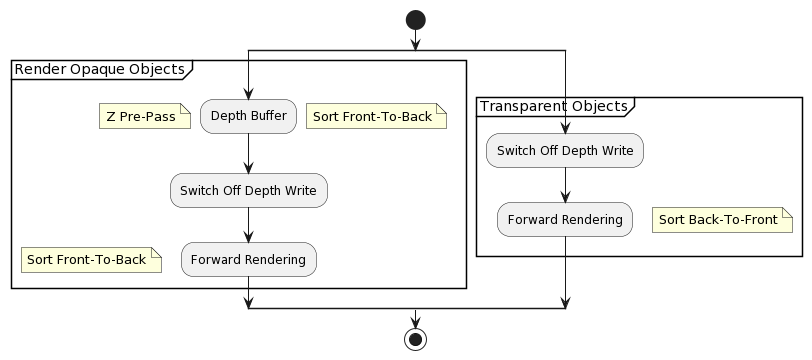

Classic forward rendering:StewartThomas13

- Depth pre-pass

- Prevents overdraw when shading

- Forward shading

- Pixel Shader

- Iterates through light list set for each object

- Evaluates material

- Diffuse texture, spec mask, bump map, etc.

- Pixel Shader

Modern Forward Shading:Olsson15

- Optional Pre-Z / Geometry Pass

- Light Assignment

- Build Light Acceleration Structure (Grid)

- Geometry Pass

- Just your normal shading pass

- For each fragment

- Look up light list in acceleration structure

- Loop over lights and accumulate shading

- Write shading to frame buffer

Z Pre-Pass rendering

Construct depth-only pass (Z pre-pass) first to fill the z buffer with depth data, and at the same time fill the z culling. Then render the scene using this occlusion data to prevent pixel overdraw.EngelShaderX709

A naïve multi-light solution that accompanies a Z pre-pass renderer design pattern would just render a limited number of lights in the pixel shader.EngelShaderX709

A more advanced approach stores light source properties such as position, light color, and other light properties in texture following a 2D grid that is laid out in the game world.EngelShaderX709

In order to render many lights:EngelSiggraph09

- Re-render geometry for each lightEngelSiggraph09

- Lots of geometry throughput

- Write pixel shader with four or eight lightsEngelSiggraph09

- Draw lights per-object

- Need to split up geometry following light distribution

- Store light properties in textures and index into this textureEngelSiggraph09

- Dependent texture look-up and lights are not fully dynamic

Space Marine:KimBarrero11

- Reject occluded objects early in G-Buffer

- Hi-Z to reject beofre ROP(Raster Operation)

- Front-to-back

- Only draw:

- maximum 75 objects

- Big enough objects in project space

- Other objects will be drawn to Z-buffer in Gbuffer pass

Unreal:Anagnostou17

- Uses reverse-Z

Lighting Pass

Single Pass Lighting

For each object:

Render mesh, applying all lights in one shader

For each object:

Find all lights affecting object

Render all lighting and material in a single shader

- Good for scenes with small number of lights (e.g. outdoor sunlight)Hargreaves04

- Difficult to organize if there are many lightsHargreaves04HargreavesHarris04Valient07

- Code generation can result in thousands of combiations for a single template shaderHargreavesHarris04Valient07

- Shader for each material vs. light setup combinationValient07

- Easy to overflow shader length limitationsHargreaves04

- Hidden surfaces can cause wasted shadingHargreavesHarris04Valient07

- Hard to integrate with shadowsHargreavesHarris04

- Stencil = No Go

- Shadow Maps = Easy to overflow VRAM

- All shadow maps have to be in memoryValient07

Multipass Lighting

For each light:

For each object affected by the light:

framebuffer += object * light

For each light:

For each object:

Add lighting from single light to frame buffer

- Worst case complexity is num_objects * num_lightsHargreaves04Lee09

- Sorting by light or by object are mutually exclusiveHargreaves04

- Hard to maintain good batching

- Ideally the scene should be split exactly along light boundaries, but getting this right for dynamic lights can be a lot of CPU workHargreaves04

- Hidden surfaces can cause wasted shadingHargreavesHarris04Valient07

- High batch cound (1/object/light)HargreavesHarris04

- Even higher if shadow-casting

- Lots of repeated work each pass:HargreavesHarris04Valient07Lee09Lauritzen10

- Vertex transform & setup

- Anisotropic filtering

- Not a scalable solutionLauritzen10

- Can only be justified when targeting graphics that generally consist of low- and medium-poly-count scenes with no complex materials, a very small number of light types, and where illumination comes from a few lights spread all over the scenePlaceres06

- Shader for each material and light typeValient07

- Hard to optimize, we were often vertex boundLee09

- High vertex processing costTrebilco09

Tiled Forward Shading

- Advantages:

- Light management is decoupled from geometryOlssonAssarsson11

- Light data can be uploaded to the GPU once per sceneOlssonAssarsson11

- FSAA works as expectedOlssonAssarsson11

- Common terms in the rendering equation can be factored outOlssonAssarsson11

- Light accumulation is done in register, at full floating point precisionOlssonAssarsson11

- Same shading function as Tiled DeferredOlssonAssarsson11

- Disadvantages:

- Each fragment may be shaded more than onceOlssonAssarsson11

- Can be addressed by using a pre-z pass

- Each fragment may be shaded more than onceOlssonAssarsson11

Basic AlgorithmOlssonBilleterAssarsson13

- Subdivide screen into tiles

- (Optional): pre-Z pass

- (Optional): find min / max z-bounds for each tile

- Assign lights to each tile

- Render geometry and compute shading for each generated fragment

// 1D texture holding per-tile light lists

uniform isampleBuffer tex_tileLightLists;

// uniform buffer holding each tile's light count and

// start offset of the tile's light list (in

// tex_tileLightIndices

uniform TileLightListRanges

{

ivec2 u_lightListRange[MAX_NUM_TILES];

}

void shading_function(inout FragmentData aFragData)

{

// ...

// find fragment's tile using gl_FragCoord

ivec2 tileCoord = ivec2(gl_FragCoord.xy) / ivec2(TILE_SIZE_X, TILE_SIZE_Y);

int tileIdx = tileCoord.x + tileCoord.y * LIGHT_GRID_SIZE_X;

// fetch tile's light data start offset (.y) and

// number of lights (.x)

ivec2 lightListRange = u_lightListRange[tileIdx].xy;

// iterate over lights affecting this tile

for (int i = 0; i < lightListRange.x; ++i)

{

int lightIndex = lightListRange.y + i;

// fetch global light ID

int globalLightId = texelFetch(tex_tileLightLists, lightIndex).x;

// get the light's data (position, colors, ...)

LightData lightData;

light_get_data(lightData, globalLightId);

// compute shading from the light

shade(aFragData, lightData);

}

// ...

}

Subdivision of Screen

- Regular N × N pixel tiles

Optional pre-Z Pass

- Required if we wish to find the Z-bounds for each tile

- In the final rendering pass, it can reduce the number of samples that need to be shaded through early-Z tests and similar hardware features

- Should only include opaque geometry

Optional Min / Max Z-Bounds

- Yields a further significant improvement

- Yields smaller per-tile bounding volumes

- Reduces the number of lights that affect a tile

Light Assignment

- CPU variant:

- Find the screen-space axis-aligned bounding boxes (AABBs) for each light source and loop over all the tiles that are contained in the 2D region of the AABB

- If min / max depth is available, perform additional test to discard lights that are outside of the tile in the Z-direction

- Find the screen-space axis-aligned bounding boxes (AABBs) for each light source and loop over all the tiles that are contained in the 2D region of the AABB

- GPU variant:

- Each tile gets its own thread group

Rendering and Shading

- For each generated sample,

- Look up which lights affect that sample by checking what lights are assigned to the sample’s tile

Transparency Support

// assign lights to 2D tiles

tilesD = build_2d_tiles();

lightLists2D = assign_lights_to_2d_tiles(tiles2D);

// draw opaque geometry in pre-Z pass and find tiles'

// extents in the Z-direction

depthBuffer = render_preZ_pass();

tileZBounds = reduce_z_bounds(tiles2D, depthBuffer);

// for transparent geometry, prune lights against maximum Z-direction

lightListsTrans = prune_lights_max(lightLists2D, tileZBounds);

// for opaque geometry additionally prune lights against

// minimum Z-direction

lightListsOpaque = prune_lights_min(lightListsTrans, tileZBounds);

// ...

// later: rendering

draw(opaque geometry, lightListsOpaque);

draw(transparent geometry, lightListsTrans);

Forward+ Rendering

- Goal:HaradaMcKeeYang13

- Materials may need to be both physically and nonphysically based

- Artists want complete freedom regarding the number of lights that can be placed in a scene at once

- Rendering data should be decoupled from the underlying rendering engine

Forward+:StewartThomas13

- Depth pre-pass

- Prevents overdraw when shading

- Provides tile depth bounds

- Separate depth prepass + depth buffer for transparentsNeubeltPettineo14

- May include vertex normal and velocityPettineo15

- Tiled light culling

- Compute shader

- Generates per-tile light list

- Transparent light list generated per-tileNeubeltPettineo14Pettineo15

- TileMinDepth = TileMin(transparentDepth)

- TileMaxDepth = TileMax(opaqueDepth)

- Culled using depth bufferPettineo15

- Async compute -> mostly freePettineo15

- Forward shading

- Pixel Shader

- Iterates through light list calculated by tiled light culling

- Evaluates material

- Diffuse texture, spec mask, bump map, etc.

- Pixel Shader

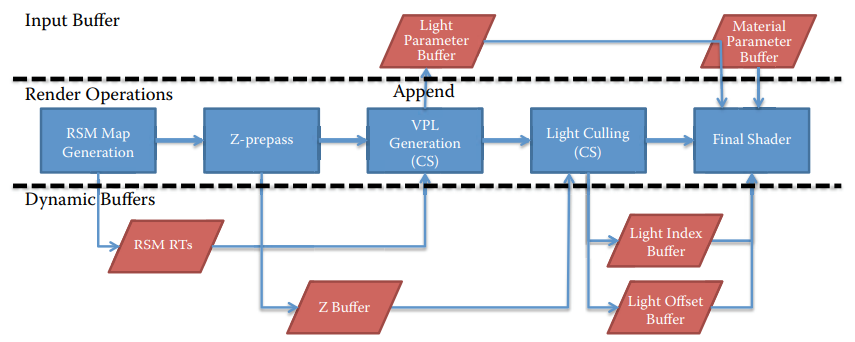

- Forward+ Light-culling stage before final shadingHaradaMcKeeYang12

- Stages:HaradaMcKeeYang12

- Depth Pre-Pass (Z prepassHaradaMcKeeYang13)

- Light CullingHaradaMcKeeYang13

- Final ShadingHaradaMcKeeYang13

- Advantages:

- Requires less memory traffic than compute-based deferred lightingHaradaMcKeeYang12

- Same memory as forward, more bandwidth, enables MSAAPesce14

- Any material (same as forward)Pesce14

- Compared to forward, no mesh splitting is necessary, much less shader permutations, less draw callsPesce14

- Compared to forward it handels dynamic lights with good cullingPesce14

- Requires less memory traffic than compute-based deferred lightingHaradaMcKeeYang12

- Disadvantages:

- Geometry submitted twiceStewartThomas13

- Small trianglesStewartThomas13

- Light occlusion culling requires a full depth pre-pass for a total of two geometrical passesPesce14

- Can be sidestepped with a clustered light grid

- All shadowmaps need to be generated upfront (more memory) or splatted in screen-space in a pre-pass

- All lighting permutations need to be addressed as dynamic branches in the shaderPesce14

- Not good if we need to support mnay kinds of light/shadow types

- Compared to forward, seems a steep price to pay to just get rid of geometry cuttingPesce14

- Even if this “solved” shader permutations, its solution is the same as doing forward with shaders that dynamic branch over light types/number of ligts and setting these parameters per draw call

Light Culling

- Similar to the light-accumulation step of deferred lightingHaradaMcKeeYang13

- Calculates a list of light indices overlapping a pixelHaradaMcKeeYang12 instead of lighting componentsHaradaMcKeeYang13

- However, per-pixel calculation has some issues:

- Memory footprint

- Efficiency of computation at light-culling stage

- However, per-pixel calculation has some issues:

- Split the screen into tiles and light indices are calculated on a per-tile basisHaradaMcKeeYang12

- Implemented using a single compute shaderStewartThomas13

- How to reduce false positives?

- Lights are too far away!

- 3D implementation uses too much memory

- 2.5 Culling!

Implementation

Gather Approach

- Thread group per tileHaradaMcKeeYang12StewartThomas13

- e.g.

[numthreads(16, 16, 1)]for 16 × 16 tile size

- e.g.

- Frustum of the tile is calculated using the range of the screen space of the tile and max/min depth values of the pixelsHaradaMcKeeYang12StewartThomas13

- Kernel first uses all the threads in a thread group to read a light to the local registerHaradaMcKeeYang12

- Overlap of the lights to the frustum of the tile is checked in parallel

- If overlaps, thread accumulates the light to TLS using local atomic operations

groupshared uint ldsLightIdx[MAX_NUM_LIGHTS_PER_TILE]StewartThomas13

- Flushes lights to the global memory using all threadsStewartThomas13

RWBuffer<uint> g_PerTileLightIndexBufferOut : register(u0);StewartThomas13

- 256 lights are culled in parallel (for 16 × 16 tile size)StewartThomas13

- Simple and effective if the number of lights is not too largeHaradaMcKeeYang12

// GET_GROUP_IDX: thread group index in X direction (SV_GroupID)

// GET_GROUP_IDY: thread group index in Y direction (SV_GroupID)

// GET_GLOBAL_IDX: global thread index in X direction (SV_DispatchThreadID)

// GET_GLOBAL_IDY: global thread index in Y direction (SV_DispatchThreadID)

// GET_LOCAL_IDX: local thread index in X direction (SV_GroupThreadID)

// GET_LOCAL_IDY: local thread index in Y direction (SV_GroupThreadID)

// No global memory write is necessary until all lights are tested

groupshared u32 ldsLightIdx[LIGHT_CAPACITY]; // Light index storage

groupshared u32 ldsLightIdxCounter; // Light index counter for the storage

void appendLightToList(int i)

{

u32 dstIdx = 0;

InterlockedAdd(ldsLightIdxCounter, 1, dstIdx);

if (dstIdx < LIGHT_CAPACITY)

{

ldsLightIdx[dstIdx] = i;

}

}

...

// 1: computation of the frustum of a tile in view space

float4 frustum[4];

{ // construct frustum

float4 v[4];

// projToView:

// takes screen-space pixel indices and depth value

// returns coordinates in view space

v[0] = projToView(8 * GET_GROUP_IDX, 8 * GET_GROUP_IDY, 1.f);

v[1] = projToView(8 * (GET_GROUP_IDX + 1), 8 * GET_GROUP_IDY, 1.f);

v[2] = projToView(8 * (GET_GROUP_IDX + 1), 8 * (GET_GROUP_IDY + 1), 1.f);

v[3] = projToView(8 * GET_GROUP_IDX, 8 * (GET_GROUP_IDY + 1), 1.f);

float4 o = make_float4(0.f, 0.f, 0.f, 0.f);

for (int i = 0; i < 4; ++i)

{

// createEquation:

// Creates a plane equation from three vertex positions

frustum[i] = createEquation(o, v[i], v[(i + 1) & 3]);

}

}

...

// 2: clip the frustum by using the max / min depth values of the pixels in the tile

float depth = depthIn.Load(uint3(GET_GLOBAL_IDX, GET_GLOBAL_IDY, 0));

float4 viewPos = projToView(GET_GLOBAL_IDX, GET_GLOBAL_IDY, depth);

int lIdx = GET_LOCAL_IDX + GET_LOCAL_IDY * 8;

{ // calculate bound

if (lIdx == 0) // initialize

{

ldsZMax = 0; // max z coordinates

ldsZMin = 0xffffffff; // min z coordinates

}

GroupMemoryBarrierWithGroupSync();

u32 z = asuint(viewPos.z);

if (depth != 1.f)

{

AtomMax(ldsZMax, z);

AtomMin(ldsZMin, z);

}

GroupMemoryBarrierWithGroupSync();

maxZ = asfloat(ldsZMax);

minZ = asfloat(ldsZMin);

}

...

// 3: cull lights

// 8 x 8 thread group is used, thus 64 lights are processed in parallel

for (int i = 0; i < nBodies; i += 64)

{

int il = lIdx + i;

if (il < nBodies)

{

// overlaps:

// light-geometry overlap check using separating axis theorem

if (overlaps(frustum, gLightGeometry[i]))

{

// appendLightToList

// Store light index to the lsit of the overlapping lights

appendLightToList(il);

}

}

}

...

// 4: fill the light indices to the assigned contiguous memory of gLightIdx using all the threads in a thread group

{ // write back

u32 startOffset = 0;

if (lIdx == 0)

{ // reserve memory

if (ldsLightIdxCounter != 0)

{

InterlockedAdd(gLightIdxCounter, ldsLightIdxCounter, startOffset);

ptLowerBound[tileIdx] = startOffset;

ldsLightIdxStart = startOffset;

}

GroupMemoryBarrierWithGroupSync();

startOffset = ldsLightIdxStart;

for (int i = lIdx; i < ldsLightIdxCounter; i += 64)

{

gLightIdx[startOffset + i] = ldsLightIdx[i];

}

}

}

Scatter Approach

- Computes which tile a light overlaps and writes the light-and tile-index data to a bufferHaradaMcKeeYang12

- Thread per lightHaradaMcKeeYang12

- The data of the buffer (ordered by light index at this point) needs to be sorted by tile indexHaradaMcKeeYang12

- We want a list of light indices per tile

- Radix sort

- Run kernels to find the start and end offsets of each tile in the buffer

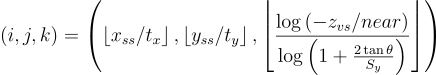

2.5 CullingHarada12

- Additional memory usage

- 0B global memory

- 4B local memory per work group

- Additional computation complexity

- A few bit and arithmetic instructions

- A few lines of codes for light culling

- No changes for other stages

- Additional runtime overhead

- < 10% compared to the original light culling

IDEA:

- Split frustum in z direction

- Uniform split for a frustum

- Varying split among frustums

FRUSTUM CONSTRUCTION:

- Calculate depth bound

- max/min values of depth

- Split depth direction into 32 cells

- min value and cell size

- Flag occupied cell

- 32 bit depth mask per work group

LIGHT CULLING:

- If a light overlaps to the frustum

- Calculate depth mask for the light

- Check overlap using the depth mask of the frustum

- Depth mask & Depth mask

1: frustum[0-4] ← Compute 4 planes at the boundary of a tile

2: z ← Fetch depth value of the pixel

3: ldsMinZ ← atomMin(z)

4: ldsMaxZ ← atomMax(z)

5: frustum[5, 6] ← Compute 2 planes using ldsMinZ, ldsMaxZ

6: depthMaskT ← atomOr(1 << getCellIndex(z))

7: for all the lights do

8: iLight ← lights[i]

9: if overlaps(iLight, frustum) then

10: depthMaskL ← Compute mask using light extent

11: overlapping ← depthMaskT ∧ depthMaskL

12: if overlapping then

13: appendLight(i)

14: end if

15: end if

16: end for

17: flushLightIndices()

Shading

- Goes through the list of lights and evaluates materials using information stored for each lightHaradaMcKeeYang12

- High pixel overdraw can kill performanceHaradaMcKeeYang12

- Depth Pre-Pass is critical

#define LIGHT_LOOP_BEGIN

int tileIndex = GetTileIndex(screenPos);

uint startIndex;

uint endIndex;

GetTileOffsets(tileIndex, startIndex, endIndex);

for (uint lightListIdx = startIdx; lightListIdx < endIdx; ++lightListIdx)

{

int lightIdx = LightIndexBuffer[lightListIdx];

LightParams directLight;

LightParams indirectLight;

if (isIndirectLight(lightIdx))

{

FetchIndirectLight(lightIdx, indirectLight);

}

else

{

FetchDirectLight(lightIndex, directLight);

}

#define LIGHT_LOOP_END

}

...

float4 PS( PSInput i ) : SV_TARGET

{

float3 colorOut = 0;

#LIGHT_LOOP_BEGIN

colorOut += EvaluateMicrofacet(directLight, indirectLight);

#LIGHT_LOOP_END

return float4(colorOut, 1.f);

}

Render PassesHaradaMcKeeYang13

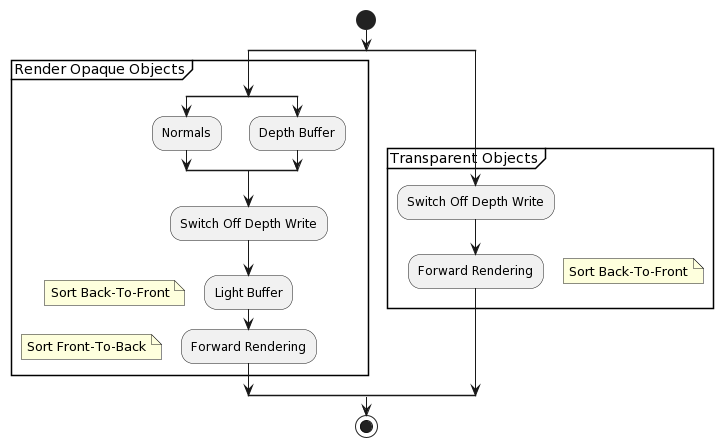

One-Bounce Indirect IlluminationHaradaMcKeeYang13

- Generate a reflective shadow map(RSM) of the scene from the point of view of the light

- Normal buffer, color buffer, world-space buffers are generated

- CS is executed to create spotlights at the location captured in the RSM

- Generated spotlights are appended to the main light list

Forward++ RenderingStewartThomas13

- Alpha Blended Geometry

- Shadow Casting Lights

- Global Illumination using VPLs

- Optimizations for depth discontinuities

Alpha Blended Geometry

- Can’t use the opaque scene’s light lists

- Frustum’s z extent was based on the opaque z-buffer

- Render blended geometry to new z-buffer

- Compute new set of tiled light lists

- minZ calculated from blended z-buffer

- maxZ calculated from opaque z-buffer

- Render blended scene using new light lists

- Geometry rendered using two-sided lighting

- Suitable for window glass & game objects

- Particle systems are better off using a custom method

- Calculating list of lights per emitter

- Lighting in vertex shader

- Two-sided lighting:

- Lighting func that accumulates lighting for front and back faces

Shadow Casting Lights

- Render shadow maps

- 2D Texture Atlas or Texture Array

- For each shadow casting lights, store shadow map index in alpha channel of light color

- Use dynamic branch in forward lighting pass to compute shadow term

// global list of lights (shadow casting + non-shadow casting)

uint shadowIndex = uint(g_PointLightColor[lightIndex].a * 255.0);

if (shadowIndex < 255) // is it shadow casting?

{

// Point light

int face = DirectionToCubeMapFace(lightDirection);

// pixel position to light space where the cube map faces

float4 texCoord = mul(float4(position, 1), g_ShadowViewProj[shadowIndex][face]);

texCoord.xyz /= texCoord.w;

texCoord.xy = 0.5 * texCoord.xy + 0.5;

// undersample per face

texCoord.xy *= g_ShadowScaleAndBias.xx;

texCoord.xy *= g_ShadowScaleAndBias.yy;

// set texture coordinates in the atlas

texCoord.xy += float2(face, shadowIndex);

texCoord.xy *= rcp(float2(6, MAX_POINT_LIGHT_SHADOWS));

texCoord.z -= g_ShadowZBias;

// hardware PCF

shadowTerm = FilterShadow(g_PointLightShadowAtlas, texCoord.xyz);

}

- Only update shadow maps if necessary

- If geometry has changed

- If lighting position has changed

- Spread cost of update over multiple frames

- Be mindful of multi GPU scenarios

- Index into projected texture in similar manner to shadow maps

- Bit pack the index along with the shadow map index

Depth Discontinuities

- Reduce false positives at depth discontinuities

- Two methods presented

- Split depth range in two at halfway point

- Keep two light lists per tile (one for each depth region)

- In the forward shading pass, each pixel determines which list to use

- 2.5D, partition depth range into 32 cells

- Determine the cell for each pixel in the tile

- Make a bit mask representing which cells are occupied in that tile

- Each light gets a similar bit mask (easy for spheres)

- Logical AND the light bit mask with the tile bit mask

- Split depth range in two at halfway point

Clustered Forward+Leadbetter14

- Avoids the need for a depth pre-pass by calculating light lists at multiple depths for each sub-rectangle and using the most appropriate cluster during surface shading.

Deferred Rendering

Goal:

Q: Why deferred rendering?

A: Combine conventional rendering techniques with the advantages of image space techniquesCalver03

- Advantages:

- Lights major cost is based on the screen area coveredCalver03, Predictable performance proportional to the lights’ screen-space areasShishkovtsov05Valient07

- All lighting is per-pixel and all surfaces are lit equallyCalver03Thibieroz04

- Lights can be occluded like other objects, this allows fast hardware Z-RejectCalver03

- Shadow mapping is fairly cheapCalver03

- Easily integrates with popular shadow techniquesHargreavesHarris04Placeres06

- Excellent batchingHargreaves04, Greatly simplifies batchingHargreavesHarris04, Cuts down on large numbers of batchesShishkovtsov05

- Render each triangle exactly onceHargreaves04, Only a single geometry pass is requiredThibieroz04Lee09Thibieroz11, Executes only texturing on geometry so it suffers less from partial quads, overdrawPesce14Olsson15

- Shade each visible pixel exactly onceHargreaves04, “Perfect” O(1) depth complexity for lightingHargreavesHarris04Thibieroz04, Perfect depth complexity for lightingShishkovtsov05Placeres06KnightRitchieParrish11Olsson15

- Easy to add new types of lighting shaderHargreaves04Koonce07

- Other kinds of postprocessing(blur, heathaze) are just special lights, and fit neatly into the existing frameworkHargreaves04, Simplifies rendering of multiple special effectsPlaceres06, G-Buffer already produces data required for post-processingThibieroz11

- Simple engine managementHargreavesHarris04Shishkovtsov05

- Lots of small lights ~ one big lightHargreavesHarris04HargreavesHarris04

- Forward can do it too!OlssonBilleterAssarsson13

- Reduces CPU usageShishkovtsov05

- Lighting costs are independent of scene complexityKoonce07Stewart15, Adding more layers of effects generally results in a linear, fixed cost per frame for additional full-screen post-processing passes regardless of the number of models on screenFilionMcNaughton08EngelShaderX709EngelSiggraph09Kaplanyan10KnightRitchieParrish11Thibieroz11

- No additional render passes on geometry for lighting, resulting in fewer draw calls and fewer state changes required to render the sceneKoonce07EngelSiggraph09Thibieroz11, Less draw calls, less shader permutations, one or few lighting shaders that can be hand-optimized wellPesce14Schulz14

- Material shaders do not perform lighting, freeing up instructions for additional geometry processingKoonce07

- Simpler shadersValient07

- More complex materials can be implementedLee09

- Not all buffers need to be updated with matching data, e.g., decal tricks

- Faster lightingKnightRitchieParrish11

- Decouples texturing from lightingPesce14Stewart15

- Potentially can be faster on complex shadersPesce14

- Allows volumetric or multipass decals (and special effects) on the G-Buffer (without computing the lighting twice)Pesce14

- Allows full-screen material passes like analytic geometric specular antialiasing (pre-filtering), which really works only done on the G-BufferPesce14

- Fails in forward on all hard edges (split normals), and screen-space subsurface scatteringPesce14

- Trivial light managementOlsson15

- Enables many lights

- Simple (light) shader managementOlsson15

- Shadow map reuseOlsson15

- Disadvantages:

- Large frame-buffer sizeCalver03, Framebuffer bandwidth can easily get out of handHargreaves04Placeres06EngelSiggraph09Kaplanyan10Thibieroz11OlssonBilleterAssarsson13Pesce14Olsson15

- Potentially high fill-rateCalver03Placeres06Kaplanyan10Lauritzen10OlssonBilleterAssarsson13StewartThomas13Pesce14Olsson15Arntzen20

- Reading lighting inputs from G-Buffer is an overheadLauritzen10

- Accumulating ligthing with additive blending is an overheadLauritzen10

- Requires high precisionOlsson15

- Multiple light equations difficultCalver03, Forces a single lighting model across the entire scene (everything has to be 100% per-pixel)Hargreaves04

High hardware specificationsCalver03- Transparency is very hardCalver03, Alpha blending is a nightmare!Hargreaves04Placeres06Valient07Kaplanyan10OlssonAssarsson11, Forward rendering required for translucent objectsThibieroz11OlssonBilleterAssarsson13Pesce14Olsson15Arntzen20

- If a tiled or clustered deferred is used, the light information can be passed to a forward+ pass for transparencies

- Can’t take advantage of hardware multisamplingHargreaves04 AA is problematicHargreavesHarris04Placeres06 MSAA difficult compared to Forward RendererEngelSiggraph09Kaplanyan10OlssonAssarsson11, Costly and complex MSAAThibieroz11StewartThomas13

- MYTH!! MSAA did not prove to be an issue!!Valient07

- Existing multi-sampling techniques are too heavy for deferred pipelineKaplanyan10

- Post-process antialiasing doesn’t remove aliasing completelyKaplanyan10

- Need to super-sample in most cases

Not good approach for older hardwareHargreaves04Not good when you have many directional lightsHargreavesHarris04Shading complexity will be O(R * L) (R = screen resolution, L = lights)- MYTH!!Shishkovtsov05

- Recalculate full lighting equation for every lightEngelSiggraph09

- Limited material representation in G-BufferEngelSiggraph09, Limited materials variationsKaplanyan10Pesce14

- MYTH?Lee09

- Only Phong BRDF (normal + glossiness)Kaplanyan10

- No aniso materialsKaplanyan10

- Can’t do lighting computations per object/vertex (i.e. GI), needs to pass everything per pixel in the G-BufferPesce14

- Alternative: store baked data in a voxel structured

- Accessing lighting related textures (gobos, cubemaps) might be less cache-coherentPesce14

- All lights (that cast shadows) must have their shadow maps built before the shading passOlssonAssarsson11

- Significant engine reworkThibieroz11

- In general it has lots of enticing benefits over forward, and it -might- be faster in complex lighting / material / decal scenarios, but the baseline simple lighting/shading case is much more expensivePesce14

- Difficult to do multiple shading modelsOlsson15

- Custom shaders

- No forward shading supportArntzen20

- No volumetric lightingArntzen20

For each object:

Render to multiple targets

For each light:

Apply light as a 2D postprocess

For each object:

Render surface properties into the G-Buffer

For each light and lit pixel

Use G-Buffer to compute lighting

Add result to frame buffer

Traditional deferred shading:Andersson09

- Graphics pipeline rasterizes gbuffer for opaque surfaces

- Normal, albedos, roughness, etc.

- Render scene geometry into G-Buffer MRTStewartThomas13

- Store material properties (albedo, specular, normal, etc.)

- Write to depth buffer as normal

- Light sources are rendered & accumulate lighting to a texture (accumulation buffer)StewartThomas13

- Light volume or screen-space tile rendering

- Use G-Buffer RTs as inputsStewartThomas13

- Render geometries enclosing light areaStewartThomas13

- Combine shading & lighting for final output

Modern Deferred Shading:Olsson15

- Render Scene to G-Buffers

- Light Assignment

- Build Light Acceleration Structure (Grid)

- Full Screen Pass

- Quad (or CUDA, or Compute Shaders, or SPUs)

- For each pixel

- Fetch G-Buffer Data

- Look up light list in acceleration structure

- Loop over lights and accumulate shading

- Write shading

- Worst case complexity is num_objects + num_lightsHargreaves04

- Perfect batchingHargreaves04

- Many small lights are just as cheap as a few big onesHargreaves04

- On MMO, given the lack of control of the game environment and the poort scalability of lighting costs within a forward renderer, deferred-shading renderer is preferableKoonce07

- Object rendering pass saves all surface parametersKimBarrero11

- Lighting pass saves lighting resultKimBarrero11

- Combiner pass combines lighting result + surface material in screen spaceKimBarrero11

G-Buffers

G-Buffers are 2D images that store geometric details in a texture, storing positions, normals and other details at every pixel. The key ingredient to hardware acceleration of G-Buffers is having the precision to store and process data such as position on a per-pixel basis. The higher precision we have to store the G-Buffer at, the slower the hardware renders.Calver03

Thin G-Buffer

The smaller the better!Kaplanyan10

- Crysis 3:SousaWenzelRaine13

- Minimize redundant drawcalls

- AB details on G-Buffer with proper glossiness

- Tons of vegetation → Deferred translucency

- Multiplatform friendly

G-Buffer encoding requirements:Pesce15

- Fast when implemented in a shader

- As compact as possible

- Makes sense under linear interpolation (hardware “blendable”, for pixel-shader based decals)

- As stable as possible, and secondarily as precise as possible

Advantages:

- Unified solution across all platformsSousaWenzelRaine13

- Deferred Rendering for less BW/Memory than vanillaSousaWenzelRaine13

- Good for MSAA + avoiding tiled rendering on Xbox360

- Tackle glossiness for transparent geometry on G-Buffer

- Alpha blended cases, e.g. Decals, Deferred Decals, Terrain Layers

- Can composite all such cases directly into G-Buffer

- Avoid need for multipass

- Deferred sub-surface scattering

- Visual + performance win, in particular for vegetation rendering

What to Store?

Depth

Calver03Hargreaves04HargreavesHarris04Thibieroz04Placeres06FilionMcNaughton08EngelShaderX709EngelSiggraph09Lee09LobanchikovGruen09Kaplanyan10KnightRitchieParrish11Thibieroz11Moradin19Huseyin20Pesce20

Use depth data to reconstruct position data. Provided by the depth buffer.

Format Suggestion:

- 24bppKaplanyan10

D32Huseyin20- Reveresed-Z

- In GBuffer,

G_Buffer.z = length(Input.PosInViewSpace); - In VS,

out.vEyeToScreen = float3(Input.ScreenPos.x * ViewAspect, Input.ScreenPos.y, invTanHalfFOV); - In PS,

float3 PixelPos = normalize(Input.vEyeToScreen) * G_Buffer.z;Placeres06

float3 vViewPos.xy = INTERPOLANT VPOS * half2(2.0f, -2.0f) + half2(-1.0f, 1.0f)) * 0.5 * p vCameraNearSize * p vRecipRenderTargetSize;

vViewPos.zw = half2(1.0f, 1.0f);

vViewPos.xyz = vViewPos.xyz * fSampledDepth;

float3 vWorldPos = mul(p_mInvViewTransform, vViewPos).xyz;

// input SV_POSITION as pos2d

New_pos2d = ((pos2d.xy) * (2 / screenres.xy)) - float2(1, 1);

viewSpacePos.x = gbuffer_depth * tan(90 - HORZFOV/2) * New_pos2d.x;

viewSpacePos.y = gbuffer_depth * tan(90 - VERTFOV/2) * New_pos2d.y;

viewSpacePos.z = gbuffer_depth;

Stencil

Format Suggestion:

- 8bppHuseyin20

Stencil to mark objects in lighting groupsKaplanyan10

- Portals / indoors

- Custom environment reflections

- Different ambient and indirect lighting

Normal

Calver03Hargreaves04HargreavesHarris04Thibieroz04Placeres06Andersson09EngelShaderX709EngelSiggraph09Lee09LobanchikovGruen09Kaplanyan10KnightRitchieParrish11Thibieroz11Huseyin20Pesce20

Format Suggestions:

R10G10B10A2_FLOATHargreaves04Pesce20- 2-bit alpha reserved to mark hairPesce20

U10V10W10A2Thibieroz04,U8V8W8Q8Thibieroz04- 24bppKaplanyan10

- Too quantized

- Lighting is banded / of low quality

- RGBA8_UNORMHuseyin20

Considerations:

- Model space vs Tangent spaceThibieroz04

Optimizations:

- Reconstruct z from xy(z = sqrt(1 - x2 - y2))Hargreaves04HargreavesHarris04Placeres06

- If all the lighting is performed in view space, then the front-faced polygons are always gonig to have negative or positive Z componentsPlaceres06

Packing:

float2 pack_normal(float3 norm)

{

float2 res;

res = 0.5 * (norm.xy + float2(1, 1));

res.x *= (norm.z < 0 ? -1.0 : 1.0);

return res;

}

Unpacking:

float3 unpack_normal(float2 norm)

{

float3 res;

res.xy = (2.0 * abs(norm)) - float2(1, 1);

res.z = (norm.x < 0 ? -1.0 : 1.0) * sqrt(abs(1 - res.x * res.x - res.y * res.y));

return res;

}

Crytek:

- Because we are storing normalized normals, we are wasting 24bpp.Kaplanyan10

- Create a cube of 2563 values, and find the quantized value with the minimal error for a ray. Bake this into a cubemap of results.Kaplanyan10

- Extract the most meaningful and unique part of this symmetric cubemap

- Save into 2D texture

- Look it up during G-Buffer generation

- Scale the normal

- Output the adjusted normal into G-Buffer

- However, not “blendable”Pesce15

Baseline: XYZ

- Store all three components of the normalPranckevicius09

// Encoding

half4 encode(half3 n, float3 view)

{

return half4(n.xyz * 0.5 + 0.5, 0);

}

// Decoding

half3 decode(half4 enc, float3 view)

{

return enc.xyz * 2.0 - 1.0;

}

Octahdral Normal VectorsCigolleDonowEvangelakosMaraMcGuireMeyer14

Map the sphere to an octahedron, project down into the z = 0 plane, and the reflect the -z-hemisphere over the appropriate diagonal.

// float3 to oct

// returns ±1

float2 signNotZero(float2 v)

{

return float2((v.x >= 0.0) ? +1.0 : -1.0, (v.y >= 0.0) ? +1.0 : -1.0);

}

// assume normalized input. output is on [-1, 1] for each component

float2 float3ToOct(float2 v)

{

// project the sphere onto the octahedron, and then onto the xy plane

float2 p = v.xy * (1.0 / (abs(v.x) + abs(v.y) + abs(v.z)));

// reflect the folds of the lower hemisphere over the diagonals

return (v.z <= 0.0) ? ((1.0 - abs(p.yx)) * signNotZero(p)) : p;

}

// oct to float3

float3 octToFloat3(float2 e)

{

float3 v = float3(e.xy, 1.0 - abs(e.x) - abs(e.y));

if (v.z < 0)

{

v.xy = (1.0 - abs(v.yx)) * signNotZero(v.xy);

}

return normalize(v);

}

Diffuse Albedo

Calver03Hargreaves04HargreavesHarris04Thibieroz04Andersson09EngelShaderX709EngelSiggraph09Lee09LobanchikovGruen09KnightRitchieParrish11Thibieroz11Moradin19Huseyin20Pesce20

Format Suggestions:

R8G8B8A8Hargreaves04Thibieroz04RGBA8_SRGBHuseyin20R10G10B10A2Pesce20

Etc.

- Specular / Exponent MapCalver03HargreavesHarris04

- EmissiveCalver03HargreavesHarris04Pesce20

R8Pesce20

- Light MapHargreavesHarris04Lee09

- Material IDCalver03HargreavesHarris04LobanchikovGruen09

- RoughnessAndersson09Moradin19Pesce20

- R8Pesce20

- AOLobanchikovGruen09Moradin19

- GlossinessLee09LobanchikovGruen09Kaplanyan10

- 8bppKaplanyan10

- Non deferrableKaplanyan10

- Required at lighting accumulation pass

- Specular is non-accumulative otherwise

- Specular PowerEngelShaderX709EngelSiggraph09Lee09

- Motion VectorEngelShaderX709EngelSiggraph09

- Velocity MaskMoradin19

- ShadowEngelShaderX709EngelSiggraph09

- Specular TermsThibieroz11

- Sky MaskMoradin19

- Vertex NormalMoradin19

- MetalnessMoradin19Huseyin20Pesce20

- Reflectance (f0)Huseyin20

R8 UNORMHuseyin20

- SmoothnessHuseyin20

R8 UNORM

- TranslucencyPesce20

R8

Examples

Example 1: Beyond3DCalver03

| MRTs | R | G | B | A |

| RT 0 | Pos.X | Pos.Y | Pos.Z | ID |

| RT 1 | Norm.X | Norm.Y | Norm.Z | Material ID |

| RT 2 | Diffuse Albedo.R | Diffuse Albedo.G | Diffuse Albedo.B | Diffuse Term |

| RT 3 | Specular Emissive.R | Specular Emissive.G | Specular Emissive.B | Specular Term |

| Material Lookup texture |

|---|

| Kspecblend |

| KAmb |

| KEmm |

| … |

Example 2: Climax Studios GDC 2004 Hargreaves04

| MRTs | R | G | B | A |

| DS | Depth R32F | |||

| RT 0 | Norm.X R10F | Norm.Y G10F | Norm.Z B10F | Scattering A2F |

| RT 1 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Emissive Term A8 |

| RT 2 (could be palettized) | Material Parameters R8 | Material Parameters G8 | Material Parameters B8 | Material Parameters A8 |

Example 3: ShaderX2Thibieroz04

| MRTs | R8 | G8 | B8 | A8 |

| RT 0 | Pos.X R16F | Pos.Y G16F | ||

| RT 1 | Pos.Z R16F | |||

| RT 2 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Normal.Z A8 |

| RT 3 | Normal.X A8 | Normal.Y L8 | ||

Example 4: Killzone 2Valient07

| MRTs | R8 | G8 | B8 | A8 |

| DS | Depth 24bpp | Stencil | ||

| RT 0 | Lighting Accumulation.R | Lighting Accumulation.G | Lighting Accumulation.B | Intensity |

| RT 1 | Normal.X FP16 | Normal.Y FP16 | ||

| RT 2 | Motion Vectors XY | Spec-Power | Spec-Intensity | |

| RT 3 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Sun-Occlusion A8 |

- Position computed from depth buffer and pixel coordinates

- Lighting accumulation - output buffer

- Intensity - luminance of Lighting accumulation

- Scaled to range [0…2]

- Normal.z = sqrt(1.0f - Normal.x2 - Normal.y2)

- Motion vectors - screen space

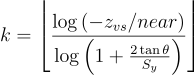

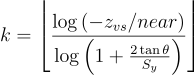

- Specular power - stored as log2(original)/10.5

- High range and still high precision for low shininess

- Sun Occlusion - pre-rendered static sun shadows

- Mixed with real-time sun shadow for higher quality

Analysis:

- Pros:

- Highly packed data structure

- Many extra attributes

- Allows MSAA with hardware support

- Cons:

- Limited output precision and dynamic range

- Lighting accumulation in gamma space

- Can use different color space (LogLuv)

- Attribute packing and unpacking overhead

- Limited output precision and dynamic range

Example 5: StarCraft IIFilionMcNaughton08

| MRTs | R | G | B | A |

| RT 0 | Unlit & Emissive R16G16B16F | Unused | ||

| RT 1 | Normal R16G16B16F | Depth | ||

| RT 2 | Diffuse Albedo.R | Diffuse Albedo.G | Diffuse Albedo.B | AO |

| RT 3 | Specular Albedo.R | Specular Albedo.G | Specular Albedo.B | Unused |

- Depth values for lighting, fog volumes, dynamic AO, smart displacement, DoF, projections, edge detection, thickness measurement

- Normals for dynamic AO

- Diffuse and specular for lighting

Example 6: S.T.A.L.E.R: Clear SkiesLobanchikovGruen09

S.T.A.L.K.E.R. originally used a 3-RT G-Buffer:

- 3D Pos + material ID (RGBA16F RT0)

- Normal + AO (RGBA16F RT1)

- Color + Gloss (RGBA8 RT2)

S.T.A.L.E.R: Clear Skies:

- Normal + Depth + Material ID + AO (RGBA16F RT0)

- Pack AO and material ID into the usable bits of the last 16 bit fp channel of RT0

- Pack data into a 32bit

uintas a bit pattern that is a valid 16bit fp number - Cast the

uintto float usingasfloat() - Cast back for unpacking using

asuint() - Extract bits

- Pack data into a 32bit

- Pack AO and material ID into the usable bits of the last 16 bit fp channel of RT0

- Color + Gloss (RGBA8 RT1)

- Trade packing math vs. Less G-Buffer texture ops

Example 7: Split/SecondKnightRitchieParrish11

| MRTs | R | G | B | A |

| RT 0 | Diffuse Albedo.R | Diffuse Albedo.G | Diffuse Albedo.B | Specular amount |

| RT 1 | Normal.X | Normal.Y | Normal.Z | Motion ID + MSAA edge |

| RT 3 | Prelit.R | Prelit.G | Prelit.B | Specular power |

Example 8: Crysis 3SousaWenzelRaine13

| MRTs | R | G | B | A |

| DS | Depth D24 | AmbID, Decals S8 | ||

| RT 0 | Normal.X R8 | Normal.Y G8 | Gloss, Z Sign B8 | Translucency A8 |

| RT 1 | Diffuse Albedo.Y R8 | Diffuse Albedo.Cb, .Cr G8 | Specular Y B8 | Per-Project A8 |

- WS Normal packed into 2 components

- Stereographic projection worked ok in practice (also cheap)

- (X, Y) = (x / (1 - z), y / (1 - z))

- (x, y, z) = (2X / (1 + X2 + Y2), 2Y / (1 + X2 + Y2), (-1 + X2 + Y2) / (1 + X2 + Y2))

- Glossiness + Normal Z Sign packed together

- GlossZsign = (Gloss * Zsign) * 0.5 + 0.5

- Albedo in Y’CbCr color space

- Y’ = 0.299 × R + 0.587 × G + 0.114 × B

- CB = 0.5 + (-0.168 × R - 0.331 × G + 0.5 × B)

- CR = 0.5 + (0.5 × R - 0.418 × G - 0.081 × B)

- R = Y’ + 1.402 × (CR - 0.5)

- G = Y’ - 0.344 × (CB - 0.5) - 0.714 × (CR - 0.5)

- B = Y’ - 1.772 × (CB - 0.5)

Example 9: DestinyTatarchukTchouVenzon13

| MRTs | R | G | B | A |

| RT 0 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | AO A8 |

| RT 1 | Normal.X * (Biased Specular Smoothness) R8 | Normal.Y * (Biased Specular Smoothness) G8 | Normal.Z * (Biased Specular Smoothness) B8 | Material ID A8 |

| DS | Depth D24 | Stencil S8 | ||

Example 10: inFAMOUS: Second SonBentley14

| MRTs | R | G | B | A |

| RT 0 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Shadow Refr A8 |

| RT 1 | Normal.α R16 | Normal.β G16 | Vertex Normal.α B16 | Vertex Normal.β A16 |

| RT 2 | Sun Shadow R8 | AO G8 | Spec Occl B8 | Gloss A8 |

| RT 3 | Wetness Params RGBA8 | RT 4 | Ambient Diffuse.R R16F | Ambient Diffuse.G G16F | Ambient Diffuse.B B16F | Amb Atten A16F | </tr>

| RT 5 | Emissive.R R16F | Emissive.G G16F | Emissive.B B16F | Alpha A16F |

| D32f | Depth D24 | |||

| S8 | Stencil S8 | |||

Example 11: RyzeSchulz14

| MRTs | R | G | B | A |

| RT 0 | Normal.X R8 | Normal.Y G8 | Normal.Z B8 | Translucency Luminance / Prebaked AO Term A8 |

| RT 1 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Subsurface Scatering Profile A8 |

| RT 2 | Roughness R8 | <td colspan="3"style="background-color:rgba(127, 255, 255, 0.5); color:black">Specular YCbCr / Transmittance CbCr GBA8</td>

- Normals encoded using BFN approach to avoid 8 bit precision issues

- Specular color stored as YCbCr to better support blending to GBuffer (e.g. decals)

- Allow blending of non-metal decals despite not being able to write alpha during blend ops

- Can still break when blending colored specular (rare case that was avoided on art side)

- Specular chrominance aliased with transmittance luminance

- Exploiting mutual exclusivity: colored specular just for metal, translucency just for dielectrics

- Support for prebaked AO value but was just used rarely in the end

Example 12: Uncharted 4ElGarawany16

- 16 bits-per-pixel unsigned buffers

- Constantly moving bits around between features during production

- Lots of visual tests to determine exactly how many bits were needed for the various features

- Heavy use of GCN parameter packing intrinsics

| Channels | G-Buffer 0 | Channels | G-Buffer 1 |

|---|---|---|---|

| R | r g | R | ambientTranslucency sunShadowHigh specOcclusion |

| G | b spec | G | heightmapShadowing sunShadowLow metallic |

| B | normalx normaly | B | dominantDirectionX dominantDirectionY |

| A | iblUseParent normalExtra roughness | A | ao extraMaterialMask sheen thinWallTranslucency |

- A third optional G-Buffer is used by more complicated materials

- Interpreted differently based on the type of the material

- Fabric, hair, skin, silk, etc.

Example 13: Jurassic World: EvolutionTheCodeCorsairJWE21

- Tiled Forward Lighting

- 8 × 8 pixel tiles extruded towards the far plane to create subfrustums

- CS is dispatched per tile

- Depth Prepass

- Thin GBuffer

| MRTs | R | G | B | A |

| RT 0 | Normal.X R | Normal.Y G | Normal.Z B | Roughness A |

| RT 1 | Motion Vectors | |||

Example 14: Mafia: Definitive EditionTheCodeCorsairMDE21

| MRTs | R | G | B | A |

| RT 0 | Normal.X R16F | Normal.Y G16F | Normal.Z B16F | Roughness A16F |

| RT 1 | Diffuse Albedo.R R8 | Diffuse Albedo.G G8 | Diffuse Albedo.B B8 | Metalness A8 |

| RT 2 | Motion Vectors RGB16U | Encoded Vertex Normal A16U | ||

| RT 3 | Specular Intensity R8 | 0.5 G8 | Curvature or Thickness (for SSS) B8 | SSS Profile A8 |

| RT 4 | Emissive.R R11F | Emissive.G G11F | Emissive.B B10F | |

Example 15: Digital Combat SimulatorPoulet21

- Five

R8G8_UNORMlayers with MSAA activated- Normal using a basic encoding scheme

- Store X and Y components and reconstructing the Z

- Albedo is stored across three channels encoded using YUV

- First channel of the second layer contains the Y

- First and second channel stores the U and V

- Roughness in the first channel, metalness in the second channel

- Precomputed AO provided by texture in the first channel

- Normal using a basic encoding scheme

- Normal encoding example from SSAO:

ld_ms(texture2dmsarray)(float,float,float,float) r1.zw, r5.xyww, GBufferMap.zwxy, l(0)

ld_ms(texture2dmsarray)(float,float,float,float) r0.w, r5.xyzw, GBufferMap.yzwx, l(0)

mad r1.zw, r1.zzzw, l(0.0000, 0.0000, 2.0000, 2.0000), l(0.0000, 0.0000, -1.0000, -1.0000)

add r5.x, r1.w, r1.z

add r5.z, -r1.w, r1.z

mul r5.xz, r5.xxzx, l(0.5000, 0.0000, 0.5000, 0.0000)

add r1.z, abs(r5.z), abs(r5.x)

add r5.y, r1.z, l(-1.0000)

dp3 r1.z, r5.xyzx, r5.xyzx

rsq r1.z, r1.z

mul r5.xyz, r1.zzzz, r5.xyzx

ge r0.w, l(0.5000), r0.w

movc r5.w, r0.w, r5.y, -r5.y

Example 16: UnityLagardeGolubev18

| MRTs | R | G | B | A |

| RT 0 (sRGB) | BaseColor.R R8 | BaseColor.G G8 | BaseColor.B B8 | Specular Occlusion A8 |

| RT 1 | Normal.xy (Octahedral 12/12) RGB8 | Perceptual Smoothness A8 | ||

| RT 2 | Material Data RGB8 | FeaturesMask(3) / Material Data A8 | ||

| RT 3 | Static diffuse lighting R11G11B10F | |||

| RT 4 (Optional) | Extra specular occlusion data RG8 | Ambient Occlusion B8 | Light Layering Mask | |

| RT 5 (Optional) | 4 Shadow Masks RGBA8 | |||

Overview

- Don’t bother with any lighting while drawing scene geometryHargreaves04

- Render to a “fat” framebuffer format, using MRT to store dataHargreaves04

- Drawback of fat-format encoding is the reading speedShishkovtsov05

- Apply lighting as a 2D postprocess, using these buffers as inputHargreaves04

Example Passes

Example 1: UnityLagardeGolubev18

Opaque Material Render Pass

- Depth Prepass

- GBuffer

- Tag stencil for regular lighting or split lighting

- Render Shadow

- Async Light list generation + Light/Material classification

- Async SSAO (Use Normal buffer)

- Async SSR (Use Normal buffer)

- Deferred directional cascade shadow

- (Use Normal buffer for normal shadow bias)

- Tile deferred lighting

- Indirect dispatch for each shader variants

- Read stencil

- No lighting: skip forward material and sky

- Regular lighting: output lighting

- Split lighting: separate diffuse and specular

- Read stencil

- Indirect dispatch for each shader variants

- Forward Opaque

- (Optional) Output BaseColor + Diffusion Profile

- (Optional) Output + Tag stencil for split lighting

- SS Subsurface Scattering

- Test stencil for split lighting

- Combine lighting

Geometry Phase

Each geometry shader is responsible for filling the G-Buffers with correct parameters.Calver03

The major advantage over the conventional real-time approach to Renderman style procedural textures is that the entire shader is devoted to generating output parameters and that it is run only once regardless of the number or types of lights affecting this surface (generating depth maps also requires the geometry shaders to be run but usually with much simpler functions).Calver03

Another advantage is that after this phase how the G-Buffer was filled is irrelevant, this allows for impostors and particles to be mixed in with normal surfaces and be treated in the same manner (lighting, fog, etc.).Calver03

Some portions of the light equation that stay constant can be computed here and stored in the G-Buffer if necessary, this can be used if you light model uses Fresnel (which are usually only based on surface normal and view directional).Calver03

Killzone 2Valient07

Fill the G-Buffer with all geometry (static, skinned, etc.)

Write depth, motion, specular, etc. properties

Initialize light accumulation buffer with pre-baked light

Ambient, Incandescence, Constant specular

Lightmaps on static geometry

YUV color space, S3TC5 with Y in Alpha

Sun occlusion in B channel

Dynamic range [0...2]

Image based lighting on dynamic geometry

Optimizations

Export Cost

- Render objects in front-to-back orderThibieroz11

- Use fewer render targets in your MRT configThibieroz11

- Less fetches during shading passes

- Less memory usage

- Avoid slow formatsThibieroz11

- Data PackingThibieroz11

- Trade render target storage for a few extra ALU instructions

Light Accumulation PassValient07

- Light is rendered as convex geometry

- Point light - sphere

- Spot light - cone

- Sun - full-screen quad

For each light:

Find and mark visible lit pixels

If light contributes to screen

Render shadow map

Shade lit pixels and add to framebuffer

Lighting Phase

The real power of deferred lighting is that lights are first class citizens, this complete separation of lighting and geometry allows lights to be treated in a totally different way from standard rendering. This makes the artist’s job easier as there is less restrictions on how lights affect surfaces, this allows for easy customizable lighting rigs.Calver03

Light shaders have access to the parameters stored in the G-Buffer at each pixel they light.Calver03

Add lighting contributions into accumulation bufferThibieroz11

- Use G-Buffer RTs as inputs

- Render geometries enclosing light area

Render convex bounding geometry

Read G-Buffer

Compute radiance

Blend into frame buffer

- Keep diffuse and specular separate

For each light:

diffuse += diffuse(GBuffer.N, L)

specular += GBuffer.spec * specular(GBuffer.N, GBuffer.P, L)

- Final full-screen pass modulates diffuse color:

framebuffer = diffuse * GBuffer.diffuse + specular

Per-Sample Pixel Shader Execution:Thibieroz09

struct PS_INPUT_EDGE_SAMPLE

{

float4 Pos : SV_POSITION;

uint uSample : SV_SAMPLEINDEX;

};

// Multisampled G-Buffer textures declaration

Texture2DMS <float4, NUM_SAMPLES> txMRT0;

Texture2DMS <float4, NUM_SAMPLES> txMRT1;

Texture2DMS <float4, NUM_SAMPLES> txMRT2;

// Pixel shader for shading pass of edge samples in DX10.1

// This shader is run at sample frequency

// Used with the following depth-stencil state values so that only

// samples belonging to edge pixels are rendered, as detected in

// the previous stencil pass.

// StencilEnable = TRUE

// StencilReadMask = 0x80

// Front/BackFaceStencilFail = Keep

// Front/BackfaceStencilDepthFail = Keep

// Front/BackfaceStencilPass = Keep;

// Front/BackfaceStencilFunc = Equal;

// The stencil reference value is set to 0x80

float4 PSLightPass_EdgeSampleOnly( PS_INPUT_EDGE_SAMPLE input ) : SV_TARGET

{

// Convert screen coordinates to integer

int3 nScreenCoordinates = int3(input.Pos.xy, 0);

// Sample G-Buffer textures for current sample

float4 MRT0 = txMRT0.Load( nScreenCoordinates, input.uSample);

float4 MRT1 = txMRT1.Load( nScreenCoordinates, input.uSample);

float4 MRT2 = txMRT2.Load( nScreenCoordinates, input.uSample);

// Apply light equation to this sample

float4 vColor = LightEquation(MRT0, MRT1, MRT2);

// Return calculated sample color

return vColor;

}

Conventional Deferred ShadingLauritzen10:

- For each light

- Use rasterizer to scatter light volume and cull

- Read lighting inputs from G-Buffer

- Compute lighting

- Accumulate lighting with additive blending

- Reorders computation to extract coherence

Modern ImplementationLauritzen10:

- Cull with screen-aligned quads

- Cover light extents with axis-aligned bounding box

- Full light meshes(spheres, cones) are generally overkill

- Can use oriented bounding box for narrow spot lights

- Use conservative single-direction depth test

- Two-pass stencil is more expensive than it is worth

- Depth bounds test on some hardware, but not batch-friendly

- Cover light extents with axis-aligned bounding box

for each G-Buffer sample

{

sampleAttr = load attributes from G-Buffer

for each light

{

color += shade(sampleAttr, light)

}

output pixel color;

}

uniform vec3 lightPosition;

uniform vec3 lightColor;

uniform float lightRange;

void main()

{

vec3 color = texelFetch(colorTex, gl_FragCoord.xy);

vec3 specular = texelFetch(specularTEx, gl_FragCoord.xy);

vec3 normal = texelFetch(normalTex, gl_FragCoord.xy);

vec3 position = fetchPosition(gl_FragCoord.xy);

vec3 shading = doLight(position, normal, color,

specular, lightPosition,

lightColor, lightRange);

resultColor = vec4(shading, 1.0);

}

Red Dead Redemption 2:Huseyin20

- Global light pass

- Fullscreen quad

- Local light pass

- Low-poly sphere shape for point light volumes

- Octahderon like shape for spotlight volumes

- Rendered back-to-front with additive blending

Plus(+) Methods: Algorithm Steps:Drobot17

- List of rendering entities

- Spatial acceleration structure with culled entity lists

- Execution algorithm per sampling point

- Traverse acceleration structure

- Iterate over existing entities

- Aka Tiled / Clustered Forward+ / Deferred+

Lighting Optimizations:LagardeGolubev18

- Focus on removing false positives

- Ex: narrow shadow casting spot lights

- False positives are more expensive in lighting pass

- Light culling execute async during shadow rendering

- List building work is absorbed by leveraging asynchronous compute

- Deferred lighting pass is not running async

- Final lighting shader has higher loop complexity and greater register pressure

- Move cost where it can be hidden

- High register pressure in lighting pass

- Light culling execute async during shadow rendering

- Hierarchical approach:

- Find screen-space AABB for each visible light

- Big tile 64 × 64 prepass

- Coarse intersection test

- Build Tile or Cluster Light list

- Narrow intersection test

- Tile:

- Based on Fine Prune Tile Lighting (FPTL)

- Build FTPL light list for tile 16 × 16

- Fine pruning: test if any depth pixel is in volume

- Aggressive removal of false positives

- One light list per tile. Allows attribute to be read into scalar registers

- Cluster:

- 32 × 32 with 64 clusters

- Use geometric series for cluster position and size

- Half of cluster (32) consumes between near and max per tile depth

- Good resolution in visible range

- Permit queries behind max per tile depth

- Particles, volume, FX

Bandwidth ProblemOlsson15

- New type of overdraw

- Light overdraw

- N lights cover a certain pixel

- N reads from the same G-Buffer location

for each light

for each covered pixel

read G-Buffer // repeated reads

compute shading

read + write frame buffer // repeated reads and writes

- Re-write loop!

for each pixel

read G-Buffer

for each affecting light

compute shading

write frame buffer

- Modern shading solution:

for each pixel

read G-Buffer

for each possibly affecting light

if affecting

compute shading

write frame buffer

- Share between groups of similar pixels

- Lots of coherency between samples

- Coherent access

- Little storage

- Conservatice lists

Pre-Tiled Shading

Advantages:

- Precise per-pixel light cullingZhdan16

- A lot of work is done outside of the shader Weaknesses:

- Massive overdraw & ROP cost when having lots of big light sourcesAndersson11Zhdan16

- Expensive to have multiple per-pixel materials in light shadersAndersson11

- MSAA lighting can be slow (non-coherent, extra bandwidth)Andersson11

- Lighting is likely to become bandwidth limitedZhdan16

Full screen lights

For lights that are truly global and have no position and size (ambient and directional are the traditional types), we create a full screen quad that executes the pixel shader at every pixel.Calver03Hargreaves04

Global directional lights has little benefit in using deferred rendering methods on them, and it would actually be slower to resample the deferred buffers again for the entire screen.FilionMcNaughton08

Shaped lights

Shaped lights can be implemented via a full screen quad in exactly the same way of directional lights just with a different algorithm computing the lights direction and attenuation, but the attenuation allows us to pre-calculate where the light no longer makes any contribution.Calver03

OptimizationCalver03

The attenuation model I use is a simple texture lookup based on distance. The distance is divided by the maximum distance that the light can possible effect and then this is used to lookup a 1D texture. The last texel should be 0, (no constant term) if the following optimisations are to be used.

OptimizationPlaceres06

Shade only the pixels influenced by the bounding object involves rendering a full screen quad, but enabling clipping and rejection features to discard many noninfluenced pixels. This requires dynamic branching.

Light Volumes

We create a mesh that encloses the light affecting volume with any pixels found to be in the interior of the volume executing the light shader.Calver03Hargreaves04

- Each pixel most be hit once and once only. If the light volume causes the light shader to be executed more than once it will be equivalent to having n lights affecting this pixel.Calver03

- The near and far clip planes must not effect the projected shape. We need the projected geometry not to be clipped at the near and far plane as this will cause holes in our lights.Calver03

- Spot LightHargreavesHarris04

- Cone

- Point LightHargreavesHarris04

- Sphere

- Direction LightHargreavesHarris04

- Quad or Box

For convex volumes the first problem is completely removed by just using back or front face culling.Calver03Hargreaves04

We can’t remove the near plane, but we can effectively remove the far plane by placing it at infinity.Calver03

Convex volumes cover the vast majority of lights shaders (e.g. spheres for point lights, cones for spotlights, etc.) and we can adapt them to use the fast z-reject hardware that is usually available.Calver03

Dealing with the light volume rendering:Hargreaves04

- Camera is outside the light bounding mesh

- Simple back face culling (each pixel most be hit once and once only)

- Camera is inside the light bounding mesh

- Draw backfaces

- Light volume intersects the far clip plane

- Draw frontfaces

- Light volume intersects both near and far clip planes

- Light is too big

Optimizations

S.T.A.L.K.E.R case:Shishkovtsov05

- Hierarchical occlusion culling system

- Coarsest test: Sector-portal culling followed by CPU-based occlusion culling

- DX9’s occlusion query to eliminate the completely occluded lights

- Stencil mask to tag affected pixels for each light

- Sun optimization

Pass 0: Render full-screen quad only where 0x03==stencil count

(where attributes are stored)

If ((N dot L) * ambient_occlusion_term > 0)

discard fragment

Else

color = 0, stencil = 0x01

Pass 1: Render full-screen quad only where 0x03==stencil count

Perform light accumulation / shading

- Social Stage:Placeres06

- Filter the lights and effects on the scene to produce a smaller list of sources to be processed

- Execute visiblity and occlusion algorithms to discard lights whose influence is not appreciable

- Project visible sources bounding objects into screen space

- Combine similar sources that are too close in screen space or influence almost the same screen area

- Discard sources with a tiny contribution because of their projected bounding object being too small or too far

- Check that more than a predefined number of sources do not affect each screen region. Choose the biggest, strongest, and closer sources.

- Filter the lights and effects on the scene to produce a smaller list of sources to be processed

- Individual Stage:Placeres06

- Global Sources

- Most fill-rate expensive

- Enable the appropriate shaders

- Render a quad covering the screen

- Most fill-rate expensive

- Local Sources

- Select the appropriate level of detail.

- Enable and configure the source shaders

- Compute the minimum and maximum screen cord values of the projected bounding object

- Enable the scissor test

- Enable the clipping planes

- Render a screen quad or the bounding object

- Global Sources

- Only shade the area where the light volume intersects scene geometryHargreaves04 Only shade surfaces inside light volume!!HargreavesHarris04

- Light volume Z Tests

- Drawing light volume backfaces

- Use

D3DCMP_GREATERto reject “floating in the air” portions of the light

- Use

- Drawing frontfaces

- Use

D3DCMP_LESSto reject “buried underground” light regions

- Use

- Drawing light volume backfaces

- Tabula Rasa uses “greater” depth test and “clockwise” winding(inverted winding)Koonce07

- Light volumes in Tabula Rasa never get clipped by the far clip plane

- Light volume Z Tests

- StarCraft II case:FilionMcNaughton08

- Early-Z to reduce cost of hidden lights

- Early stencil to reduce cost of objects behind light that are not lit by it

Other optimizations:

- ClippingHargreavesHarris04

- Occlusion queryHargreavesHarris04

- Z-CullHargreavesHarris04

Stencil Cull

- Render light volume with color write disabledHargreavesHarris04

- Depth Func = LESS, Stencil Func = ALWAYS

- Stencil Z-FAIL = REPLACE (with value X)

- Rest of stencil ops set to KEEP

- Render with lighting shaderHargreavesHarris04

- Depth Func = ALWAYS, Stencil Func = EQUAL, all ops = KEEP, Stencil Ref = X

- Unlit pixels will be culled because stencil will not match the reference value * Only regions that fail depth test represent objects within the light volumeHargreavesHarris04

Killzone 2 case:Valient07

- Marks pixels in front of the far light boundary

- Render back-faces of light volume

- Depth test GREATER-EQUAL

- Write to stencil on depth pass

- Skipped for very small distant lights

- Find amount of lit pixels inside the volume

- Start pixel query

- Render front faces of light volume

- Depth test LESS-EQUAL

- Don’t write anything - only EQUAL stencil test

- Rendering:

- Render front-faces of light volume

- Depth test - LESS-EQUAL

- Stencil test - EQUAL

- Runs only on marked pixels inside light

- Render front-faces of light volume

- If light is “very small”

- Don’t do any stencil marking

Light Shader Occlusion Optimisations

The basis of using occlusion culling with light shaders is that the depth buffer used for the creation of the G-Buffer is available at no cost (this is only true if the resolution of the G-Buffer is the same as destination colour buffer and that we are using the same projection matrix for the geometry shaders and light shaders).Calver03

I simply turn off the occlusion culling if the light shader hits the near plane and just render the back faces without depth testing. Its means some pixels run the pixel shader unnecessarily but it’s very cheap on the CPU and the actual difference is usually only a few pixels.Calver03

Accessing Light Properties

- Avoid using dynamic constant buffer indexing in pixel shaderThibieroz11

- Generates redundant memory operations repeated for every pixel

ex)

struct LIGHT_STRUCT

{

float4 vColor;

float4 vPos;

};

cbuffer cbPointLightArray

{

LIGHT_STRUCT g_Light[NUM_LIGHTS];

};

float4 PS_PointLight(PS_INPUT i) : SV_TARGET

{

// ...

uint uIndex = i.uPrimIndex / 2;

float4 vColor = g_Light[uIndex].vColor; // NO!

float4 vLightPos = g_Light[uIndex].vPos; // NO!

}

- Instead fetch light properties from CB in VS (or GS)

- And pass them to PS as interpolants

- No actual interpolation needed

- Use

noninterpolationto reduce number of shader instruction

PS_QUAD_INPUT VS_PointLight(VS_INPUT i)

{

PS_QUAD_INPUT Out = (PS_QUAD_INPUT)0;

// Pass position

Out.vPosition = float4(i.vNDCPosition, 1.0);

// Pass light properties to PS

uint uIndex = i.uVertexIndex / 4;

Out.vLightColor = g_Light[uIndex].vColor;

Out.vLightPos = g_Light[uLightIndex].vPos;

return Out;

}

struct PS_QUAD_INPUT

{

nointerpolation float4 vLightColor : LCOLOR;

nointerpolation float4 vLightPos : LPOS;

float4 vPosition : SV_POSITION;

};

Tiled Shading

Amortizes overheadLauritzen10.

- Advantages:

- Fastest and most flexibleLauritzen10Olsson15

- Enable efficient MSAALauritzen10Olsson15

- G-Buffers are read only once for each lit sampleOlssonBilleterAssarsson13

- Framebuffer is written to onceOlssonAssarsson11OlssonBilleterAssarsson13

- Common terms of the rendering equation can be factored out and computed once instead of recomputing them for each lightOlssonAssarsson11OlssonBilleterAssarsson13

- Work becomes coherent within each tileOlssonAssarsson11OlssonBilleterAssarsson13

- Each sample in a tile requires the same amount of work

- Allows for efficient implementation on SIMD-like architectures

- Each sample in a tile requires the same amount of work

- Low bandwidthOlsson15

- Simple light assignmentOlsson15

- Trivial light list lookupOlsson15

- High performanceOlsson15

- TransparencyOlsson15

- Constant & absolute minimal bandwithAndersson09OlssonAssarsson11

- Read gbuffers & depth once!

- Doens’t need intermediate light buffersAndersson09

- Can take a lot of memory with HDR, MSAA & color specular

- Scales up to huge amount of big overlapping light sourcesAndersson09

- Fine-grained culling (16 × 16)

- Only ALU cost, good future scaling

- Could be useful for accumulating VPLs

- Light accumulatino is done in register, at full floating point precisionOlssonAssarsson11

- Lighting phase takes all visible lights in one goZhdan16

- Disadvantages:

- Still tricky to afford many shadowed lights per pixelPesce14Olsson15, Makes dynamic shadows harderPesce14

- No shadow map reuseOlsson15

- Complex light shaderOlsson15

- View dependenceOlsson15

Requires DX 11 HWAndersson09CS 4.0 / 4.1 difficult due to atomics & scatteredgroupsharedwrites

- Culling overhead for small light sourcesAndersson09

- Can accumulate them using standard light volume rendering

- Or separate CS for tile-classific

- Potentially performanceAndersson09

- MSAA texture loads / UAV writing might be slower then standard PS

- Can’t output to MSAA textureAndersson09

- DX11 CS UAV limitation

- Less accurate culling with tile granularityZhdan16

- Frustum-primitive tests are either too coarse or too slowZhdan16

- Still tricky to afford many shadowed lights per pixelPesce14Olsson15, Makes dynamic shadows harderPesce14

- Challenges:

- Frustum primitive culling not accurate, creates false positivesSchulz14Zhdan16

- Often considerably more pixels shaded than with stencil tested light volumes

- Handling light resources (all resources need to be accessible from CS)Schulz14

- Shadow maps stored in large atlas

- Diffuse and specular probe cubemaps stored in texture arrays

- Projector textures stored in texture array (have to use standardized dimensions and format)

- Keeping GPRs under controlSchulz14

- Dynamic branching for different light types

- Deep branching requires additional GPRs and lower occupancy

- Had to manually rearrange code to stay within desired GPR limit

- Frustum primitive culling not accurate, creates false positivesSchulz14Zhdan16

- Divide the screen into a gridBalestraEngstad08Andersson11WhiteBarreBrisebois11OlssonBilleterAssarsson13

- (Optional) Find min / max Z-bounds for each tileOlssonBilleterAssarsson13

- Find which lights intersect each cellBalestraEngstad08Andersson11OlssonBilleterAssarsson13

- +How many lightsAndersson09

- Render quads over each cell calculating up to 8 lights per passBalestraEngstad08

- Results in a light buffer

- Only apply the visible light sources on pixels in each tileAndersson09Andersson11OlssonBilleterAssarsson13

Algorithm:OlssonAssarsson11

- Render the (opaque) geometry into the G-BuffersStewartThomas13

- Ordinary deferred geometry pass

- Store material propertiesStewartThomas13

- Provides tile depth boundsStewartThomas13

- Construct a screen space grid, covering the frame buffer, with some fixed tile size, t = (x, y), e.g. 32 × 32 pixelsWhiteBarreBrisebois11StewartThomas13

- (Optional) Find min / max Z-bounds for each tileOlssonBilleterAssarsson13

- For each light: find the screen space extents of the light volume and append the light ID to each affected grid cellOlssonBilleterAssarsson13StewartThomas13

- Find the screen space extents of the light bounding sphere and then insert the light into the covered grid cellsOlssonAssarsson11

- Store the culling results in a texture:WhiteBarreBrisebois11

- Column == Light ID

- Row == Tile ID

- Store the culling results in a texture:WhiteBarreBrisebois11

- Cull analytical lights (point, cone, line), per tileWhiteBarreBrisebois11StewartThomas13

- Compute shaderStewartThomas13

- Generates per-tile light listStewartThomas13

- Same compute shader then can use per-tile list to do shadingStewartThomas13

- Can sort lights by kind to reduce branchingBentley14

- Find the screen space extents of the light bounding sphere and then insert the light into the covered grid cellsOlssonAssarsson11

- For each fragment in the frame buffer, with location f = (x, y)